Update:

I just noticed that in order for this to work, you do need to enable the workaround for unsupported network devices. I guess the recovery process still works better with this solution, however, because I'm using AFP file sharing rather than the SMB I was using on the Buffalo NAS.

Enable unsupported devices on your Time Machine client by running this command in a terminal:

```shell
defaults write com.apple.systempreferences TMShowUnsupportedNetworkVolumes 1
```

OK... on with the blog...

The Problem

For some time now, I've been thinking about a better backup solution at home. For a few years, I've been using a Buffalo TeraStation NAS, which although it does the job, is painfully slow... at least in the RAID-5 mode that I've been using.

When I finally upgraded to Mac OS Leopard, I was determined to make Time Machine work with the Buffalo NAS, even though Time Machine doesn't support it. After a bit of googling, I found that there was a solution: after jumping through some hoops (creating a sparse bundle disk image, making sure it's named properly, moving it to the NAS, and then changing some Mac OS system preferences on the command line), like magic, Time Machine can now make use of the NAS.
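For anyone curious about the "named properly" part: the convention commonly described for Leopard is the computer name, an underscore, and the MAC address of the primary Ethernet interface (en0) with the colons stripped out. Below is a minimal sketch of just that naming step, assuming that convention. tm_bundle_name is a hypothetical helper, and the hdiutil line in the comments is an untested example with a placeholder size and volume name.

```shell
#!/bin/bash
# Hypothetical helper: build the sparse bundle name Time Machine is commonly
# said to look for on Leopard: <ComputerName>_<en0 MAC without colons>.sparsebundle
tm_bundle_name() {
  local name=$1 mac=$2
  # Strip the colons and lowercase the hex digits (the way ifconfig prints them).
  mac=$(printf '%s' "$mac" | tr -d ':' | tr 'A-F' 'a-f')
  printf '%s_%s.sparsebundle\n' "$name" "$mac"
}

# Example (untested; size and volume name are placeholders):
#   name=$(scutil --get ComputerName)
#   mac=$(ifconfig en0 | awk '/ether/ {print $2}')
#   hdiutil create -size 500g -type SPARSEBUNDLE -fs HFS+J \
#     -volname "Backup of $name" "$(tm_bundle_name "$name" "$mac")"
```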

There are issues with this hack, however, and I recently found a big one when my MacBook suffered a hard drive failure and I had to replace the disk. Once I had the new disk in, I booted from the Mac OS install DVD and tried to restore from my Time Machine backup, only to come to a harsh realization: this "unsupported NAS" hack doesn't work out of the box, meaning the system installer can't detect my backup archive out on the network. Oops. After a bit of fretting, I was able to copy the sparsebundle from the NAS to a USB hard drive. This was unbearably slow. Hours. Misery. But in the end, it worked... I was able to restore my system from the USB drive with no problems.

This experience taught me 2 things: First, that Time Machine is a friggin' awesome backup app. The restore process (once I got access to my backup) was smooth as silk, and a stunningly easy way to restore the system to a new disk. Once the restore process completed, I rebooted and it was like nothing ever happened. Magic.

The second thing I learned was that my NAS had to go. I wanted to replace it with a new solution that was hopefully much faster, and more importantly, *natively* supported by Time Machine.

The obvious choice would be the Apple Time Capsule. But, as a system administrator, and someone who has had a hard disk failure in just about every computer I've ever owned over the past decade, I have one major issue with the Time Capsule: it only has 1 disk spindle. I don't give a rat's ass if it's a (ahem) "Server Grade" hard drive that they're putting in there, it's still a single disk, and it's bound to fail eventually. Guaranteed. Just a matter of when. No... I want my backup solution to use multiple spindles, preferably in a RAID-5 configuration.

The Solution

Basically, this is what I cobbled together:

- (1) Refurbished Mac Mini (2.0GHz CPU, 1GB RAM, made sure it had the FireWire 800 port) from the Apple Store refurb section

- (1) qBOX-SF (4-bay, FireWire 800, disk chassis to be used in JBOD mode) from DAT Optic - UPDATE: qBOX-SF can apparently be found here now

- (4) Seagate Barracuda ES.2 750 GB SATA disk drives

For this project, I also decided that if at all possible, I was going to use Sun's ZFS filesystem, as it has some really nice features, like ease of administration, data integrity checks, snapshots, compression, etc. Unfortunately, the ZFS implementation in Mac OS Leopard is read-only. Fortunately, there is a ZFS port that provides read-write capabilities, which the Apple developers were kind enough to post at MacOS Forge. If you want to take this route, the necessary binaries, along with installation instructions, can be had from that site. Plus, ZFS would let me use RAID-Z, which is sort of like RAID-5, only better.

The qBOX-SF chassis uses 2 separate controller boards; each controls 2 disks and has 2 FW800 ports. It also comes with a short FW800 daisy-chain cable to connect the two boards onto a single bus. Out of the box, the controllers are configured for JBOD mode, which is exactly what I wanted, but if you are so inclined, you can fiddle with some jumpers on the boards to configure each pair of disks as either a RAID-0 (stripe) or RAID-1 (mirror) volume at the hardware level. Whatever floats your boat. In JBOD ("Just a Bunch Of Disks") mode, the controllers present each disk individually, and we give them all to ZFS on the Mac to manage. Daisy-chain the two controllers, plug one of them into the Mac, and the Mac will see all 4 disks individually.

I'm not going to go into much detail on ZFS - there are other places on the interweb for you to learn that. But in a nutshell, here are the terminal commands I used on the Mac Mini to set up the ZFS pool (you need to be root on the Mac, i.e. 'sudo su -'). The following command creates a new ZFS pool called "tank" (yes, I used "tank"... the same name used in just about every documented ZFS tutorial... I wasn't feeling too creative... DON'T YOU JUDGE ME!). Besides, it's easy to rename a pool in ZFS after it is created.

Anyway, this creates the pool called "tank", in RAID-Z mode, and gives it the four new disks (whole disks, not just a partition):

```shell
# zpool create tank raidz disk1 disk2 disk3 disk4
```

Note: with four 750 GB disks, RAID-Z gave me a 2.0 TB pool.
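As a rough sanity check of that 2.0 TB figure: single-parity RAID-Z spends about one disk's worth of space on parity, and drive vendors count a gigabyte as 10^9 bytes while ZFS reports sizes in powers of two. The sketch below does that arithmetic; the helper names are hypothetical and ZFS metadata overhead is ignored, so real numbers come out a little lower.

```shell
#!/bin/bash
# Rough RAID-Z capacity check (hypothetical helper names).
# Single-parity RAID-Z gives up about one disk's worth of space to parity,
# so usable space is roughly (disks - 1) * disk size. ZFS metadata overhead
# is ignored here.
raidz_usable_gb() {
  local disks=$1 size_gb=$2
  echo $(( (disks - 1) * size_gb ))
}

# Convert vendor gigabytes (10^9 bytes) to TiB (2^40 bytes), two decimals.
gb_to_tib() {
  awk -v gb="$1" 'BEGIN { printf "%.2f\n", gb * 1e9 / 2^40 }'
}

usable=$(raidz_usable_gb 4 750)
echo "usable: ${usable} GB, about $(gb_to_tib "$usable") TiB"
```

For four 750 GB disks this prints `usable: 2250 GB, about 2.05 TiB`, which lines up with a pool of roughly 2.0 TB once overhead is taken out.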

If you were so inclined, you could just leave it at that. The pool automatically gets imported and mounted on the Mac as /Volumes/tank, and you could start dumping your files right in there. However, I wanted a bit more fine-grained control over different shares, compression, quotas, etc., so I created a ZFS filesystem within that pool:

```shell
# zfs create -o compression=lzjb tank/compress
```

This creates (and mounts) a new filesystem at /Volumes/tank/compress and enables lzjb compression on it (gzip is not available yet on the Mac port of ZFS). There is currently a problem with ZFS filesystems showing up in the Mac OS Finder as symlinks, so to avoid running into any problems with this, I created a new directory within "compress" that I will actually share over the network for Time Machine:

```shell
# mkdir /Volumes/tank/compress/Time_Machine
```
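To keep the setup repeatable, the three commands above can be wrapped in a small script. This is a sketch rather than part of my own setup: setup_pool and run are hypothetical names. The script only prints the commands unless DRY_RUN=0. The disk identifiers are examples, so confirm yours with diskutil list first, because zpool create wipes whatever is on those disks.

```shell
#!/bin/bash
# Hypothetical helper that replays the pool setup from this post.
# Prints each command by default; run with DRY_RUN=0 (as root) to execute.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: setup_pool POOL DISK...   (whole-disk identifiers, e.g. disk1 disk2 ...)
setup_pool() {
  local pool=$1
  shift
  run zpool create "$pool" raidz "$@" || return 1
  run zfs create -o compression=lzjb "$pool/compress" || return 1
  run mkdir "/Volumes/$pool/compress/Time_Machine"
}

# Example: setup_pool tank disk1 disk2 disk3 disk4
```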

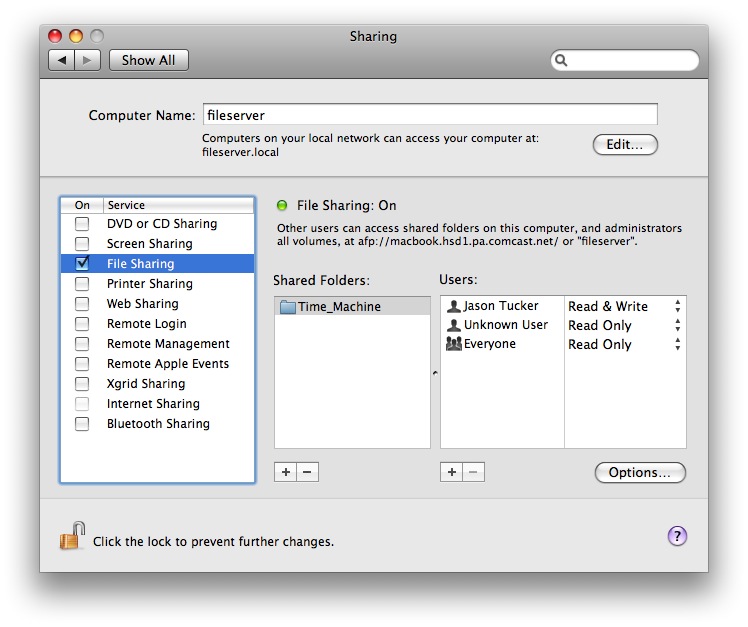

OK, at this point, you can enable file sharing in the "Sharing" system preference pane and add this new directory to the list of shared folders. Be sure to check the share's permissions to control who can access what.

Once they mount the shared volume, the other Time Machine clients on your network will be able to use it for their backups (see the update at the top about unsupported devices). You will probably need to authenticate the first time you mount it, but after that Time Machine will automatically mount the volume as needed to do its incremental backups.

So... Does It Work?

Yup! And it seems about twice as fast as the old NAS solution I was using.

After doing a full backup, one of the first things I tried was booting off my Leopard install DVD and doing a restoration dry run, just to see if I could recover my system in the event of another drive failure. Happily, it worked as I had hoped!

I strongly suggest everyone else test this out for themselves, just to make sure there are no surprises later on when you really need this to work. In fact, I've gotten into the habit of creating two partitions on my system disks - one to use day-to-day, and one to use for testing things like this. If you have the disk space to spare, having a test partition like this to play around with comes in handy.

The actual restore process is fairly straightforward, with only a couple of extra steps needed to make it work from a network share. Once you boot off the install DVD, the installer application launches and asks you to pick a language; after that, you can perform the following 3 steps:

- Configure your network settings.

- Manually mount your backup share.

- Start the restore process.

For step #2, you will need to open a terminal (from the Utilities menu). At the prompt, the following commands will mount your shared volume:

```shell
# mkdir /Volumes/Time_Machine
# mount_afp afp://username:password@server.address/Time_Machine /Volumes/Time_Machine
```

Obviously, you will need to use whatever username and password you have set up for the shared volume, along with the address of your server. Once the volume mounts, you can exit the terminal app.
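One gotcha with putting the password in the URL: characters such as @, : or / in a password (or username) break the URL unless they are percent-encoded. Below is a minimal sketch, assuming mount_afp decodes percent-escapes the way URL syntax implies. The function names are hypothetical and only ASCII input is handled. Keep in mind that a password passed this way can also show up in the shell history and the process list.

```shell
#!/bin/bash
# Percent-encode a string for use inside a URL (ASCII input only).
# Unreserved characters (letters, digits, . _ ~ -) pass through unchanged.
urlencode() {
  local s=$1 out='' c i
  for (( i = 0; i < ${#s}; i++ )); do
    c=${s:i:1}
    case $c in
      [A-Za-z0-9._~-]) out+=$c ;;
      *) out+=$(printf '%%%02X' "'$c") ;;
    esac
  done
  printf '%s\n' "$out"
}

# Hypothetical helper: build the AFP URL used with mount_afp.
# Usage: afp_url USER PASSWORD SERVER SHARE
afp_url() {
  printf 'afp://%s:%s@%s/%s\n' "$(urlencode "$1")" "$(urlencode "$2")" "$3" "$4"
}

# Example (placeholder credentials):
#   mount_afp "$(afp_url username 'p@ss:word' server.address Time_Machine)" /Volumes/Time_Machine
```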

At this point, you should be able to select the option to restore from backup from the Utilities menu, and just follow the prompts.

NOTE: One odd thing I've noticed is that occasionally, the tools under the Utilities menu get "greyed out" and can't be selected for some reason. If this happens, all you need to do is go back to the installer screen and click the "Back" button until you reach the language selection screen, then start over. Your network settings and mounts should remain intact. A minor nuisance, it seems.

Monitoring

Having redundant disks doesn't do squat for you if they silently fail and you have no idea there is a problem. Single-parity RAID-Z will survive the failure of one disk, but if a second one fails, your pool is not usable. So, to help monitor the health of the pool, I've written a Perl script that can be set up as a cron job to run automatically and email the results to you.

This script needs to be run from root's crontab. I'm running it once a day, and also automatically at system boot. Here's the script, installed as /usr/local/bin/zfscheck.pl (make sure it's executable):

```perl
#!/usr/bin/perl
#
# zfscheck.pl
#
# Intended to be used as an automated cron job to periodically
# check the health of ZFS pools, and send the result as an email.
# Tested on Mac OS 10.5.6.
# Needs to be run in root's crontab.

use strict;
use warnings;
use Net::SMTP;

#### START CONFIG SECTION
my $server = 'smtp.domain.com';    # change to your SMTP server
my $from   = 'someone@domain.com'; # Sender address
my $to     = 'someone@domain.com'; # Recipient address
#### END CONFIGS

my @zpool;       # raw "zpool list" output, header included
my @zfs;         # raw "zfs list" output, header included
my $pools  = 0;  # number of pools listed
my $online = 0;  # number of pools whose HEALTH column reads ONLINE

open(ZPOOL, '/usr/sbin/zpool list |') or die "Cannot run zpool: $!";
my $header = 1;
while (<ZPOOL>) {
    chomp;
    push(@zpool, $_);
    if ($header) { $header = 0; next; }   # first line is the column header
    my @line = split;
    $pools++;
    # On this build the columns are NAME SIZE USED AVAIL CAP HEALTH ALTROOT,
    # so HEALTH is index 5 (other ZFS versions may order columns differently).
    $online++ if defined $line[5] && $line[5] eq 'ONLINE';
}
close ZPOOL;

# Report an error unless at least one pool exists and every pool is ONLINE.
my $status = ($pools > 0 && $online == $pools) ? 'OK' : 'ERROR DETECTED';

open(ZFS, '/usr/sbin/zfs list |') or die "Cannot run zfs: $!";
while (<ZFS>) {
    chomp;
    push(@zfs, $_);
}
close ZFS;

my $subject    = "ZFS Status: $status";
my $zpool_head = "ZPOOL STATUS:\n\n";
my $zfs_head   = "\n\nZFS STATUS:\n\n";

my $smtp = Net::SMTP->new($server) or die "Cannot connect to $server: $@";
$smtp->mail($from);
$smtp->to($to);
$smtp->data();
$smtp->datasend("To: $to\n");
$smtp->datasend("From: $from\n");
$smtp->datasend("Subject: $subject\n");
$smtp->datasend("Content-Type: text/html; charset=ISO-8859-1\n");
$smtp->datasend("Content-Transfer-Encoding: 7bit\n");
$smtp->datasend("\n");    # done with header
$smtp->datasend("<html><body><pre>");
$smtp->datasend($zpool_head);
$smtp->datasend("$_\n") foreach @zpool;
$smtp->datasend($zfs_head);
$smtp->datasend("$_\n") foreach @zfs;
$smtp->datasend("</pre></body></html>");
$smtp->dataend();
$smtp->quit();
```

The crontab looks like this:

```shell
# crontab -u root -l
@reboot /usr/local/bin/zfscheck.pl
@daily /usr/local/bin/zfscheck.pl
```
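If you'd rather not depend on the column layout of zpool list, which differs between ZFS versions, zpool status -x offers a simpler health test: it prints exactly "all pools are healthy" when nothing is wrong. Below is a minimal sketch of a subject-line helper built on that. zfs_subject is a hypothetical name, it calls zpool through PATH (under cron you may need /usr/sbin on the PATH), and the mail usage in the comment assumes a working local mail setup.

```shell
#!/bin/bash
# Hypothetical helper: derive the "ZFS Status: ..." subject from zpool status -x,
# which prints exactly "all pools are healthy" when no pool has a problem.
zfs_subject() {
  local out
  out=$(zpool status -x 2>&1)
  if [ "$out" = "all pools are healthy" ]; then
    echo "ZFS Status: OK"
  else
    echo "ZFS Status: ERROR DETECTED"
  fi
}

# Example cron usage (assumes mail(1) can deliver mail from this machine):
#   zpool status | mail -s "$(zfs_subject)" someone@domain.com
```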

Caveats

I've only done this with Mac OS Leopard (10.5.x).

I have no idea what the Snow Leopard release is going to bring. Full ZFS support was supposed to be added to Snow Leopard (Server, at least), but lately it seems that Apple may not be delivering that. I don't think anyone will know for sure until that release debuts.

If Snow Leopard does manage to get proper ZFS support, the good news is that it should just work with the pool we've already created here. ZFS pools created on one system *should* be importable on any other system that uses ZFS - even different OSes.

If Snow Leopard doesn't have full ZFS, will the MacOS Forge zfs build work? I haven't the foggiest idea. That would be a good thing to test out with that test partition I mentioned earlier, though.

Also, this probably goes without saying, but I'll say it anyway... you can't use anything older than Leopard (such as Tiger or Panther) on your backup server. Time Machine can only back up to Leopard (or, presumably, versions after Leopard).

ZFS Pools Using Whole Disks

In my example, I chose to give ZFS entire disks (disk1, disk2, etc.) rather than a slice, or partition, on each disk (disk1s2, disk2s2, etc.). There are a couple of issues with this, which I felt were pretty minor. First, whenever the Mac boots up, the FireWire cable is connected, or the system otherwise detects the disks, you will get a series of pop-ups (one for each disk) saying that an unrecognized disk has been inserted and asking you what to do about it. I just ignore these.

The second issue I've seen with whole-disk pools is that the current Mac implementation cannot import them automatically at system boot. In other words, when the system starts up, the pool will not mount. I don't care much about this, since I'm using this machine as a file server that will stay up constantly, except for maintenance. Manually importing the pool is simple enough, however:

```shell
# zpool import tank
```
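If you'd rather not do that by hand, one option is an @reboot cron job (like the one used for the monitoring script) that retries the import for a while, in case the FireWire disks are not visible yet when cron starts. This is an untested sketch, not part of the setup described here. ensure_pool_imported and the script path in the comment are hypothetical; it relies on zpool list POOL exiting non-zero when the pool is not imported.

```shell
#!/bin/bash
# Hypothetical boot-time helper: make sure a pool is imported, retrying a few
# times in case the FireWire disks are not visible yet when the job starts.
# Usage: ensure_pool_imported [POOL] [TRIES] [DELAY_SECONDS]
ensure_pool_imported() {
  local pool=${1:-tank} tries=${2:-5} delay=${3:-10} i
  for (( i = 1; i <= tries; i++ )); do
    # "zpool list POOL" exits non-zero when the pool is not imported.
    if zpool list "$pool" >/dev/null 2>&1 || zpool import "$pool" >/dev/null 2>&1; then
      echo "$pool is imported"
      return 0
    fi
    sleep "$delay"
  done
  echo "could not import $pool after $tries tries" >&2
  return 1
}

# Example root crontab entry for a script that calls "ensure_pool_imported tank"
# (path is hypothetical):
#   @reboot /usr/local/bin/import-tank.sh
```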

ZFS UI integration, features

While the core ZFS functionality is there, some of the bells and whistles are missing from the current (zfs-119) build available from MacOS Forge. For instance, compression is there, but only LZJB, not gzip. There is also an issue where ZFS child filesystems show up in the Finder as links rather than as separate filesystems or even directories. The Finder can still browse down into these filesystems, though. And I've already mentioned the issues with whole-disk zpools above.

You can also check out the list of issues over at MacOS Forge.

ZFS not browseable locally by Time Machine

This sort of scared me at first. When I first started playing around with ZFS on the Mac, I fired up Time Machine on the same machine that was hosting the ZFS pool and tried to back up to it. This doesn't work. You could probably hack around it by treating the pool as an "unsupported" device, but I wouldn't recommend it, for the same reason I mentioned near the top of this blog post.

Fortunately, when I tried sharing the ZFS volume over the network, I found that the clients *can* use it with Time Machine OK. Whew!

In my case, this isn't a problem, because I don't care if the OS disk of the backup server itself gets backed up or not. I'm not loading anything special or irreplaceable on it. If the server's OS disk gets cooked, I'll just reinstall Leopard fresh, and reapply the ZFS code bits. Maybe not as convenient as a Time Machine restore, but certainly nothing to lose sleep over.

Headless Mini

I guess this isn't really a caveat, but more of a tip. In my environment, I'm running my Mac Mini headless - that is, without a monitor attached. I don't even own an external monitor anymore, unless you count my TV. If you also want to run the Mini headless, here's what you need to do to set it up.

First, you need a USB keyboard. Any old keyboard should do. Connect the keyboard to the Mac, and turn on the power while holding down the "T" key. This places the Mac into "target disk mode", which just means that you can use it like any other external FireWire disk. FireWire is important here... target disk mode does not work over USB. Anyway, once the Mini is in target disk mode, cable it to your MacBook, and then on the MacBook, select the Mini as its startup disk (in System Preferences) and reboot. When the system comes back up, it will be running the OS that's loaded on the Mini, and you can configure it however you want. The key things to set up at this point are networking (give the Mini a static IP on your network so you can easily find it remotely) and Screen Sharing. I also enabled Remote Login so that I could access the Mini via SSH for remote command-line administration.

Once the OS on the Mini is set up to your liking, shut down, disconnect the FireWire cable from your MacBook, remove the keyboard (if still present), connect the Mini to your network, and power it on. Once the system boots, you will be able to connect to it via Screen Sharing, or alternatively, via SSH. I wish I could do everything via SSH, but some things - like configuring sharing - I haven't been able to figure out how to do from the command line. All ZFS admin can be done from the command line, however.
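Two of the setup steps above, the static IP and Remote Login, should also be doable from the command line with Leopard's networksetup and systemsetup tools. Screen Sharing and file sharing are left to System Preferences here. This is a minimal sketch, assuming the Mini's network service is named "Ethernet" (check with networksetup -listallnetworkservices) and using placeholder addresses. configure_mini is a hypothetical name, and the script only prints the commands unless DRY_RUN=0.

```shell
#!/bin/bash
# Sketch: command-line versions of two headless-Mini setup steps.
# Prints the commands by default (DRY_RUN=1); set DRY_RUN=0 to run them as root.
# The service name and all addresses passed in are examples, not real settings.
DRY_RUN=${DRY_RUN:-1}

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "$*"; else "$@"; fi
}

# Usage: configure_mini SERVICE IP SUBNET_MASK ROUTER
configure_mini() {
  local service=$1 ip=$2 mask=$3 router=$4
  run networksetup -setmanual "$service" "$ip" "$mask" "$router" || return 1  # static IP
  run systemsetup -setremotelogin on                                          # enable SSH
}

# Example: configure_mini Ethernet 192.168.1.50 255.255.255.0 192.168.1.1
```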

Last But Not Least...

Time will tell, but I'm hoping this is a reliable solution. Still, I'm not sure any backup system is perfect. Never put all your eggs in one basket: if your data is hypercritical, you would be wise to keep multiple copies. If you really can't afford to lose the data, you might want to consider keeping additional copies on something like tapes, and storing copies of those tapes in a safe deposit box.